Since 2020, the rise of XR (Extended Reality) technology has brought a new revolution to the film and television industry, and LED virtual production based on LED background walls has become a hot topic. The combination of XR technology and LED displays bridges the virtual and the real, and it has already achieved a great deal in virtual film and television production.

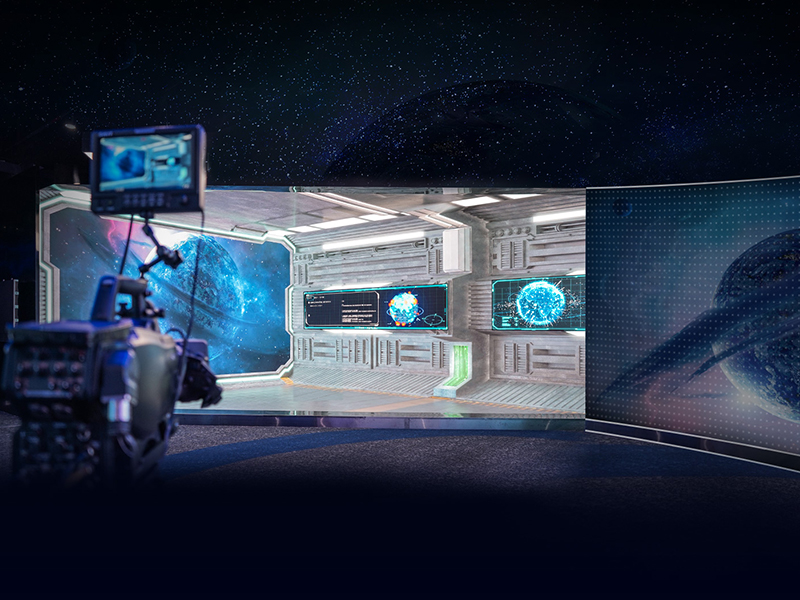

What is LED studio virtual production? It is a comprehensive solution, toolset and approach, which we define as "real-time digital production". In practice, LED virtual production falls into two types of application: the "VP studio" and the "XR extended studio".

The VP studio is a new way of shooting film and television, used mostly for films and TV series. It lets producers replace green screens with LED screens and display real-time backgrounds and visual effects directly on set. Its advantages show up in several ways:

1. Freedom of shooting space. Scenes of every kind can be shot inside an indoor studio. A forest, a grassland or a snow-capped mountain can be created in real time with a rendering engine, which greatly reduces the cost of location shooting.

2. A simpler production process. "What you see is what you get": during shooting, the producer can see the intended shot on the screen immediately, and scene content and narrative space can be modified and adjusted in real time. This greatly speeds up scene changes.

3. An immersive performance space. Actors perform inside the immersive space and experience it directly, so their performances are more real and natural. At the same time, the LED display acts as a light source, giving the scene real light and shadow and subtle color, so the footage looks more realistic and the overall quality of the film improves greatly.

4. A shorter return-on-investment cycle. Compared with the time-consuming and labor-intensive traditional shooting process, virtual production is highly efficient and greatly shortens the production cycle. Films can be released sooner, cast and crew costs are reduced, and overall shooting costs fall considerably. Virtual production based on LED background walls is considered a major development in filmmaking, giving new impetus to the future of the film and television industry.

XR extended shooting uses visual interaction technology. A production server combines the real and the virtual, and the LED display creates a virtual environment for human-computer interaction, giving the audience an immersive, seamless transition between the virtual world and the real world. The XR extended studio can be used for live webcasts, live TV broadcasts, virtual concerts, virtual evening galas and commercial shoots. XR extended shooting can extend virtual content beyond the LED stage and superimpose the virtual on the real in real time, heightening the visual impact and artistic creativity the audience experiences. It lets content creators explore endless possibilities in a limited space and pursue ever richer visual experiences.

In LED studio virtual production, the overall shooting workflow of the "VP studio" and the "XR extended studio" is roughly the same and is divided into four stages: preliminary preparation, pre-production, on-site production and post-production.

The biggest difference between VP film and television production and traditional production lies in the changed workflow, and its most important feature is front-loaded post-production. VP production moves 3D asset creation and other steps that traditional visual effects films handle in post to before the actual filming. The virtual content made in pre-production can be used directly for in-camera visual effects, while post-production steps such as rendering and compositing move to the set, where the composite picture is produced in real time. This greatly reduces the post-production workload and improves production efficiency. In the early stages, VFX artists use real-time rendering engines and virtual production systems to create 3D digital assets. Next, a seamlessly spliced, high-performance LED display is used as the background wall to build an LED stage in the studio. The pre-built 3D scene is loaded onto the LED background wall through the XR virtual server, creating an immersive virtual scene with high picture quality. An accurate camera tracking system, together with object position tracking and positioning technology, then follows the camera and the subject during the shoot. The camera tracking data is sent to the XR virtual server over a dedicated protocol (Free-D) so that the virtual scene is rendered from the camera's current viewpoint, and the captured material can then be viewed and edited.
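The tracking link described above can be illustrated with a small decoder. The sketch below assumes the commonly documented Free-D "D1" message layout (29 bytes, big-endian: message type, camera ID, pan/tilt/roll in 1/32768 degree, X/Y/Z position in 1/64 mm, raw zoom and focus values, two spare bytes, then a checksum). The function names are hypothetical, and the field scaling should be checked against the tracking vendor's documentation before relying on it.

```python
# Sketch of a Free-D "D1" message encoder/decoder.
# ASSUMPTION: the commonly documented 29-byte D1 layout; verify with your tracking vendor.

D1_TYPE = 0xD1
D1_LENGTH = 29
ANGLE_SCALE = 32768.0  # assumed: angles are stored in 1/32768 degree
POS_SCALE = 64.0       # assumed: positions are stored in 1/64 millimetre


def freed_checksum(body: bytes) -> int:
    """Checksum byte: 0x40 minus the sum of all preceding bytes, modulo 256."""
    return (0x40 - sum(body)) % 256


def _s24(raw: bytes) -> int:
    """Decode a big-endian signed 24-bit integer."""
    return int.from_bytes(raw, "big", signed=True)


def build_d1(camera_id: int, pan: float, tilt: float, roll: float,
             x_mm: float, y_mm: float, z_mm: float,
             zoom_raw: int = 0, focus_raw: int = 0) -> bytes:
    """Encode one (hypothetical) tracking sample as a D1 message, e.g. for testing."""
    body = bytes([D1_TYPE, camera_id])
    for angle in (pan, tilt, roll):
        body += round(angle * ANGLE_SCALE).to_bytes(3, "big", signed=True)
    for pos in (x_mm, y_mm, z_mm):
        body += round(pos * POS_SCALE).to_bytes(3, "big", signed=True)
    body += zoom_raw.to_bytes(3, "big") + focus_raw.to_bytes(3, "big")
    body += bytes(2)  # two spare bytes
    return body + bytes([freed_checksum(body)])


def parse_d1(msg: bytes) -> dict:
    """Decode a D1 message into camera pose, zoom and focus values."""
    if len(msg) != D1_LENGTH or msg[0] != D1_TYPE:
        raise ValueError("not a D1 message")
    if freed_checksum(msg[:-1]) != msg[-1]:
        raise ValueError("checksum mismatch")
    return {
        "camera_id": msg[1],
        "pan_deg": _s24(msg[2:5]) / ANGLE_SCALE,
        "tilt_deg": _s24(msg[5:8]) / ANGLE_SCALE,
        "roll_deg": _s24(msg[8:11]) / ANGLE_SCALE,
        "x_mm": _s24(msg[11:14]) / POS_SCALE,
        "y_mm": _s24(msg[14:17]) / POS_SCALE,
        "z_mm": _s24(msg[17:20]) / POS_SCALE,
        "zoom_raw": int.from_bytes(msg[20:23], "big"),
        "focus_raw": int.from_bytes(msg[23:26], "big"),
    }
```

For example, `parse_d1(build_d1(1, 12.5, -3.25, 0.0, 1000.0, -250.5, 1600.0))` round-trips the pose. In a real setup the server would receive such messages from the tracking system over a serial or network link and hand the decoded pose to the rendering engine each frame.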

The steps for XR extended shooting are roughly the same as for a VP studio shoot. In a VP studio shoot, however, the whole shot is usually captured in camera with no need for extension. In the XR extended studio, because the picture is extended beyond the screen, there are extra steps that extend the "background" picture. Once the camera material reaches the XR virtual server, the scene is extended to the outer frustum and to the areas outside the screen by image overlay, blending the real scene with the virtual one for a more realistic, immersive background. Color calibration, position correction and other techniques then make the picture inside and outside the screen match, and the extended overall picture is output. The completed virtual scene can be viewed and output from the director system's back end. Building on extended reality, XR extended shooting can also add motion capture sensors for AR tracking and interaction, so performers can interact with virtual elements in three-dimensional space on stage, instantly and without restriction.
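Matching the picture inside and outside the screen can be pictured as a per-pixel choice. The minimal sketch below assumes the server already has a mask marking where the LED wall appears in the camera frame (for example, from projecting the wall's known geometry through the tracked camera). Inside the mask it keeps the camera pixel; outside it uses the rendered virtual pixel after a simple per-channel gain that stands in for color calibration. The function names and gain values are hypothetical, and production systems also blend the seam and use much richer color pipelines.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # 8-bit RGB
Image = List[List[Pixel]]      # rows of pixels
Mask = List[List[bool]]        # True where the LED wall is visible to the camera


def apply_gain(pixel: Pixel, gains: Tuple[float, float, float]) -> Pixel:
    """Scale each channel by a calibration gain and clamp to the 0..255 range."""
    r, g, b = (min(255, max(0, round(ch * k))) for ch, k in zip(pixel, gains))
    return (r, g, b)


def composite_set_extension(camera: Image, virtual: Image, wall_mask: Mask,
                            gains: Tuple[float, float, float] = (1.0, 1.0, 1.0)) -> Image:
    """Keep camera pixels where the LED wall is in view; fill the rest with
    color-matched virtual pixels (the set extension)."""
    if not (len(camera) == len(virtual) == len(wall_mask)):
        raise ValueError("frame heights differ")
    result: Image = []
    for cam_row, virt_row, mask_row in zip(camera, virtual, wall_mask):
        if not (len(cam_row) == len(virt_row) == len(mask_row)):
            raise ValueError("frame widths differ")
        result.append([
            cam_px if on_wall else apply_gain(virt_px, gains)
            for cam_px, virt_px, on_wall in zip(cam_row, virt_row, mask_row)
        ])
    return result
```

A hard mask like this would leave a visible edge at the wall border; the point of the sketch is only the split between "real" pixels and "extended" pixels.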

LED studio virtual production is a fusion of technologies. The hardware and software it requires include LED displays, rendering engines, camera tracking systems and virtual production systems. Only when these hardware and software systems are fully integrated can striking visual effects be captured and the intended result achieved. Although the LED display in an XR extended studio covers a small area, it needs low latency to support live broadcasts, higher data throughput and real-time interaction, and a more powerful system for real-time image processing. The LED area of a VP studio is large, but because no screen extension is needed, its system requirements are relatively lower; high-quality image capture is still required, however, so equipment such as rendering engines and cameras must be of professional grade.
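The low-latency requirement for live XR work can be reasoned about by adding up the delay of each stage in the chain and expressing the total in video frames. The sketch below does only that arithmetic; the stage names and millisecond figures in the example are hypothetical placeholders, not measurements of any real system.

```python
from typing import Dict


def total_latency_ms(stage_latencies_ms: Dict[str, float]) -> float:
    """Sum the delay contributed by each stage of the pipeline."""
    return sum(stage_latencies_ms.values())


def latency_in_frames(stage_latencies_ms: Dict[str, float], frame_rate_hz: float) -> float:
    """Express the total pipeline delay as a number of video frames."""
    frame_duration_ms = 1000.0 / frame_rate_hz
    return total_latency_ms(stage_latencies_ms) / frame_duration_ms


# Hypothetical chain: tracking -> rendering -> LED processing.
example_chain = {"tracking": 10.0, "rendering": 40.0, "led_processing": 20.0}
print(latency_in_frames(example_chain, 50.0))  # 70 ms at 20 ms per frame -> 3.5
```

A frame count like this can help when deciding how much delay compensation to apply so that tracking data and camera images stay in step for a live broadcast.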

The virtual production system is the infrastructure that connects the physical stage with the virtual scene, tightly integrating the LED display hardware, control system, content rendering engine and camera tracking. The XR virtual production server is the core of the virtual shooting workflow. It takes in camera tracking data, virtual production content and the live images captured by the cameras; it outputs virtual content to the LED wall and sends the composited XR video to the director station for live broadcast and recording. The most common virtual production systems are Disguise and Hecoos.
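The server's role as a hub, taking in tracking data, content and camera images and sending out an LED-wall feed and a composited program feed, can be sketched as a per-frame loop. This is a simplified illustration and not how Disguise, Hecoos or any other product is implemented: all class and function names are hypothetical, frames are represented as strings, and the render and composite steps are passed in as placeholder functions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TrackingSample:
    """Hypothetical camera pose for one frame, as reported by the tracking system."""
    frame: int
    pan_deg: float
    tilt_deg: float
    x_mm: float
    y_mm: float
    z_mm: float


@dataclass
class FrameOutputs:
    led_wall: str  # virtual content sent to the LED wall
    program: str   # composited XR picture for the director station


class XRServerSketch:
    """Toy model of the server's per-frame data flow."""

    def __init__(self,
                 render: Callable[[TrackingSample], str],
                 composite: Callable[[str, str], str]) -> None:
        self.render = render          # placeholder for the rendering engine
        self.composite = composite    # placeholder for camera/virtual compositing
        self.recorded: List[FrameOutputs] = []  # stand-in for recording/storage

    def process_frame(self, sample: TrackingSample, camera_frame: str) -> FrameOutputs:
        # Render the virtual scene from the tracked camera's viewpoint.
        virtual_view = self.render(sample)
        outputs = FrameOutputs(
            led_wall=virtual_view,
            program=self.composite(camera_frame, virtual_view),
        )
        self.recorded.append(outputs)
        return outputs
```

Keeping the renderer and compositor as injected functions makes the sketch mirror the paragraph above: the server itself only routes inputs to outputs.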

The rendering engine is where the latest graphics technologies are put to work: the images, scenes and color effects the audience sees are controlled directly by the engine. These effects rely on several rendering techniques: ray tracing, which computes each pixel by tracing rays through the scene; path tracing, which follows rays from the camera as they bounce around the scene and averages many such paths per pixel; photon mapping, in which light sources emit "photons" whose recorded hits are used to estimate illumination; and radiosity, which computes light reflected between diffuse surfaces before it reaches the camera. The most common engines are Unreal Engine, Unity3D and Notch, while Maya and 3ds Max are widely used mainly for creating 3D assets.
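The ray-based techniques listed above all rest on the same primitive: finding where a ray meets scene geometry. As a minimal illustration (not how any particular engine implements it), the sketch below solves the ray-sphere intersection, i.e. the quadratic |o + t*d - c|^2 = r^2, for the nearest hit in front of the ray origin. The function names are hypothetical.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere_hit(origin: Vec3, direction: Vec3,
                   center: Vec3, radius: float) -> Optional[float]:
    """Return the distance t (in units of `direction`) to the nearest hit in
    front of the origin, or None if the ray misses the sphere."""
    oc = (origin[0] - center[0], origin[1] - center[1], origin[2] - center[2])
    a = dot(direction, direction)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None  # no real roots: the ray misses
    sqrt_disc = math.sqrt(disc)
    # Check the nearer root first; skip roots behind the origin.
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:
            return t
    return None
```

A ray tracer calls a test like this for every pixel's ray against every object, keeps the nearest hit, and then shades that point; path tracing and photon mapping reuse the same test for their secondary rays.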

LED studio virtual production is a new application scenario for large-screen displays. It is a new market that has grown out of the continuing development of small-pitch LED and steady improvements in LED display technology. Compared with traditional LED screen applications, displays for virtual production face stringent requirements: more accurate color reproduction, high refresh rate, high brightness and high contrast under dynamic conditions, wide viewing angles without color shift, and high picture quality.
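One way to see why refresh rate matters on a camera-facing LED wall is to count how many refresh cycles fall inside a single camera exposure: when only a few cycles fit, an incomplete cycle makes up a larger share of the exposure and can show up as flicker or scan lines. The sketch below computes that count from frame rate and shutter angle. The 3840 Hz refresh value in the example and the 20-cycle threshold are hypothetical choices for illustration, not industry specifications.

```python
def exposure_time_s(frame_rate_hz: float, shutter_angle_deg: float) -> float:
    """Exposure time per frame: (shutter angle / 360) / frame rate."""
    return (shutter_angle_deg / 360.0) / frame_rate_hz


def refresh_cycles_per_exposure(refresh_hz: float, exposure_s: float) -> float:
    """Number of LED refresh cycles that occur during one exposure."""
    return refresh_hz * exposure_s


def meets_cycle_threshold(refresh_hz: float, exposure_s: float,
                          min_cycles: float = 20.0) -> bool:
    """HYPOTHETICAL rule of thumb: require at least `min_cycles` cycles per exposure."""
    return refresh_cycles_per_exposure(refresh_hz, exposure_s) >= min_cycles


# 24 fps with a 180-degree shutter gives a 1/48 s exposure.
exposure = exposure_time_s(24.0, 180.0)
print(round(refresh_cycles_per_exposure(3840.0, exposure), 2))  # about 80 cycles
```

The same wall seen through a 1/2000 s shutter gets fewer than two refresh cycles per exposure, which is why fast shutter speeds are the harder case.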

Post time: Oct-19-2022